Dear Reader,

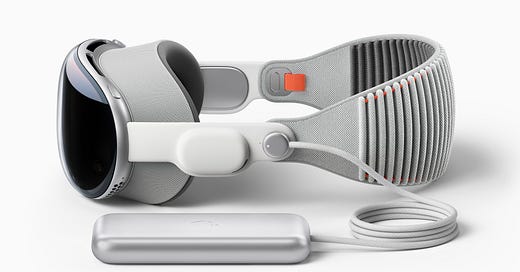

After many years of speculation and waiting, Apple have finally unveiled Vision Pro, their first “VR headset”. Except it’s not a VR headset; it’s a Spatial Computer. Whilst this might just feel like marketing language, I think it definitely helps frame how new this type of product really is.

This isn’t something that is tethered to your iPhone or your Mac; it’s a full computer running on an M2 chip, the same as the most recent Mac laptops and desktops. It also isn’t VR; that’s just a small use case within it. Instead, the default mode is Augmented Reality, showing you a window-based interface within your own world, complete with some truly astonishing effects such as shadows, reflections, and realtime blurring to make it feel grounded in the real world. You can go into a full VR mode but this seems likely to be the exception rather than the rule.

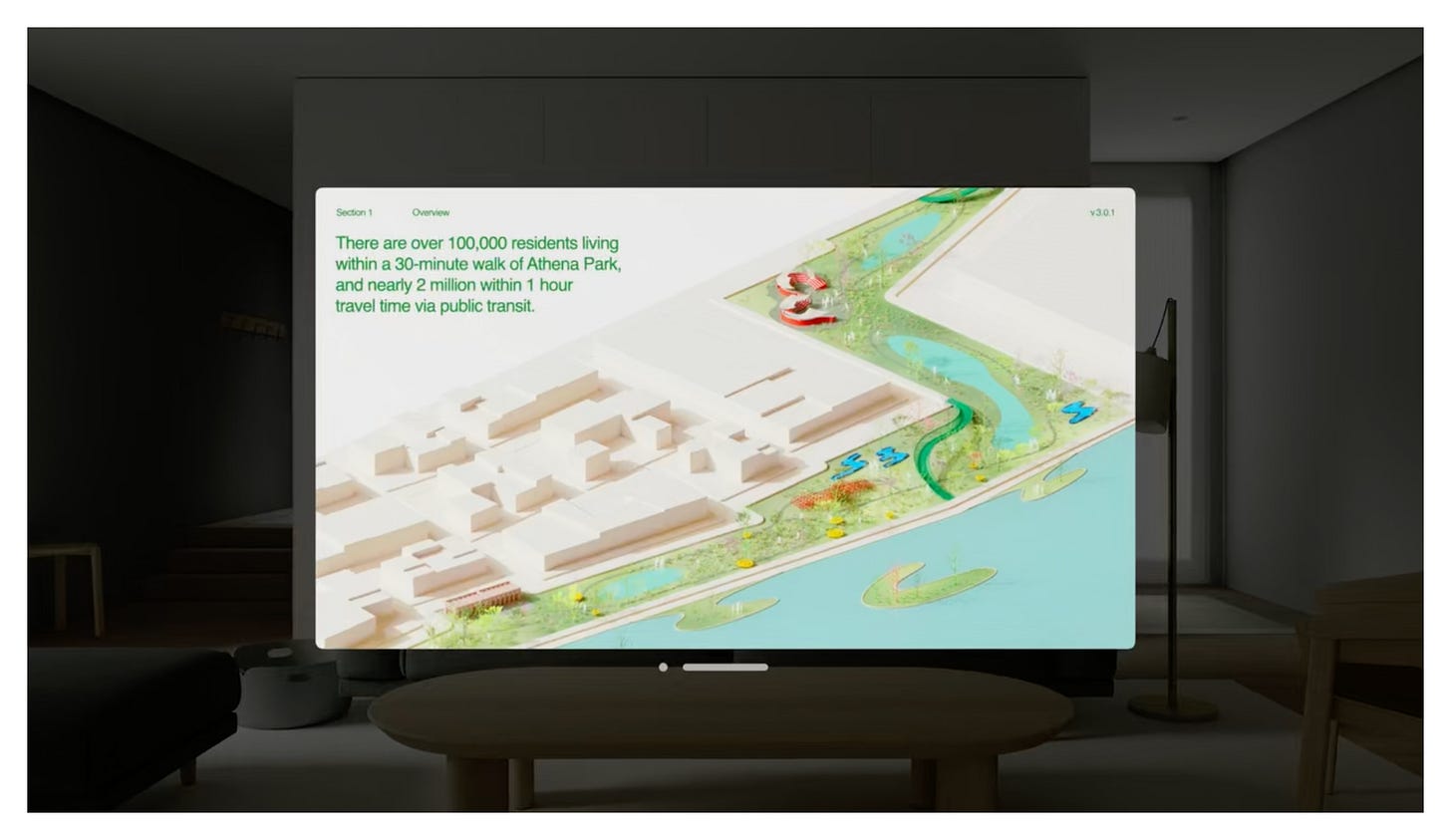

I was particularly drawn to this demo of the Keynote app in the Principles of spatial design talk yesterday:

In Keynote, the app opens in a window. But when it's time to play the slideshow, we use dimming to bring focus to this presentation. Dimming is a simple way to create contrast between your content and people's surroundings without taking them out of their space. When it's time to rehearse the presentation, we can bring people onto the big stage fully immersing them within the theater¹. Life-size experiences like these require more room, so Keynote is now in a Full Space and other apps are hidden.

You can use the app in a window placed in the real world but when you start to play the presentation the world gets dimmed to focus on the content. This is insane!

That a device can not only insert content into the real world but then selectively remove bits or have a half-way mode where it’s dimmed is an astonishing feat of engineering. Another impressive demonstration was a mindfulness exercise in which the room is dimmed as you view a pulsating sphere; as the sphere expands with your intake of breath, the room disappears into darkness only to return as you breathe out. Incredible.

Another facet that I find utterly fascinating is the way presence is managed with EyeSight:

An outward display reveals your eyes while wearing Vision Pro, letting others know when you are using apps or fully immersed.

It had been rumoured that there was an external display to show your facial expression but this had been dismissed as unnecessary and a drain on a presumably constrained battery. It turns out that it is very real and, to my mind, is one of the best thought-out features of the device. In fact, it was referred to in the Keynote as a “foundational design goal”:

Another foundational design goal for Vision Pro was that you're never isolated from the people around you. You can see them, and they can see you. Your eyes are a critical indicator of connection and emotion, so Vision Pro displays your eyes when someone is nearby. Not only does EyeSight reveal your eyes, it provides important cues to others about what you're focused on.

Vision Pro has a curved OLED panel with a lenticular lens creating a faux depth effect so your eyes look correct no matter which angle somebody is looking at you from. I love that your eyes get obscured if you’re looking at windows within Augmented Reality and that the entire display gets covered in a fog when you’re within a full screen Virtual Reality experience. It makes it incredibly clear to anyone around you as to whether you can see them or not.

This goes the other way too: if somebody comes close to you whilst you have the headset on, a blended cutout appears so you can see them. Whilst switching on the external cameras of a VR headset so you can see your surroundings has been around for a while², this is a whole new level.

I saw a mockup a few days before WWDC of Tim Cook wearing a VR headset with his Memoji on the front. This seemed semi-plausible to me as I didn’t think there would be enough cameras internally to replicate the user’s eyes. Memoji also seemed like the way one would appear on FaceTime calls. Instead, Vision Pro scans your face with its spatial camera during setup and then creates an uncanny valley 3D model of you which is then animated. This is the bit that looks the most odd to me but I’m excited to see how well it will work in practice. Regardless, it’s telling that Apple have gone for a real world look rather than a cartoon one, clearly pitching this product as a professional device rather than a novelty.

It was rumoured for a long time that this headset was going to be around $3000. Some expected it to be far cheaper in a twist like the original iPad announcement³ but instead it’s actually a bit more, starting at $3500. I expect that “starting at” bit is due to it either having multiple storage capacities or to cover those of us that need to buy the magnetic prescription lenses which I fully expect to be at least $250 per lens. Many look at that and think this is a ridiculous price for a VR headset, and they’d be right; but this isn’t a VR headset. I personally think $3500 is an absolute steal for this level of hardware, combining an M2 computer with over two 4K screens’ worth of pixels along with seamless blending of virtual and actual reality with no hand controllers required⁴. It’s incredibly reassuring that the hands-on reviews coming out of WWDC are all saying it works as shown; the keynote was not a CGI mockup or aspirational demo but an actual, real device.

Whilst there are some videos from WWDC showing off various facets of Vision Pro and how to develop apps for it, a full SDK won’t be available until later this month. In terms of developing for the headset, there is a simulator (which looks pretty good) but you can also go to six different locations around the world to test your apps or you can send a build to Apple and they’ll try it out and let you know if they run into any problems. Apple has also announced that developer kits will be available with more details coming soon. I’ll certainly be aiming to get one.

The age of spatial computing has begun… and I cannot wait!

— Ben

Contents

What’s New at WWDC 2023

The Spatial Audio Database

The Lossless Audio Database

ChatGPT as an Intern Developer

Recommendations

Roadmap

What’s New at WWDC 2023?

In the last issue, I mentioned in my intro:

In the indie development space, the launch of a new version of iOS is the time that getting a coveted featured spot from Apple or the wider blogosphere is more likely if you can add some new feature to your app related to the iOS update. The problem with this is that you typically get about 2 months to come up with this idea and incorporate it into your app whilst dealing with the multitude of bugs in the beta software and the lack of concrete documentation. It can be a stressful time!

Fortunately it’s not that much of a stressful time this year as there isn’t much in the software updates that I’m keen to explore. There are certainly a couple of interesting tidbits but no major new feature that will get me rushing to get something into an app in time for the assumed September release of iOS 17.

Rather than building something, I thought I’d run through a few of the things that have caught my eye from the Keynote, State of the Union, updated documentation, and actually using the new software.

iOS 17

I always find it hard to predict what new features are going to appear in iOS as the low hanging fruit has long since gone. There are very few areas I look at and think “this needs changing” and there isn’t anything in this release that has made me go “wow, I wish I’d thought of that”. This is not necessarily a bad thing; I’d much rather have ten or twenty minor improvements than one big flashy feature.

Call Screen Personalisation - When this was first showcased in the keynote it looked like a user could create a custom call screen that would then be used on other users’ devices. This obviously has some bad use cases and wasn’t something I was keen on having inflicted upon my device. Fortunately it’s not quite as clear-cut as it looked. The system piggybacks on the existing contact card customisation (where you change your avatar and it suggests to people with you in their contacts that they accept the update) and you can create custom call screens for all of your contacts if you want to. The UI is pretty much identical to the lock screen stuff that was added in iOS 16.

Live Voicemail - True story: Visual Voicemail was the feature that convinced me to buy an iPhone when they first launched. This seems like a logical next step and doesn’t involve carrier support which Visual Voicemail still requires.

Messages - A lot of “sherlocking” in the Messages app as Sticker Drop gets its raison d'être removed and the Check In feature destroys an entire category of apps. My personal favourite is audio message transcription; my wife often leaves me audio messages (which I despise - they’re so interruptive) so having them automatically transcribed is a big win for me even though it comes at the expense of Scusi on macOS which I’ve been using up until now. I’ve also noticed that the location part for family members has been redesigned so iOS now shows you not only where they are but how long it would take you to get to them.

Live Video reactions - The first client that uses the thumbs up reaction to produce fireworks in a meeting with me is going to get hung up on.

Standby - I can see this being really useful for a lot of people. Unfortunately, I have a MagSafe Duo on my bedside table and this feature only works when the device is standing up in landscape mode. I also don’t really want this information in my bedroom, especially when I have an Apple Watch on my wrist whilst I sleep with the screen turned off. The stand that they’re showing off in all the promo art for this is not available yet but presumably will be in September. Personally I see this as a preview of what a HomePod with a screen is going to look like…

Interactive Widgets - Bizarre that this hasn’t come sooner (especially given that Apple Music has an interactive widget on Android) but an easy win. I can see the benefit for the Things widgets that I use and I have a client that is excited to get them into their reminders app but I can’t say I’m overly excited about them.

AirDrop - The NameDrop feature is cute (I had a pitch given to me for something like this back in the iPhoneOS 3.0 days) but the ability to AirDrop and have it continue over the internet if you step away is the more useful feature to me.

Journal - I haven’t been able to play with this as it isn’t coming until later this year but from the screenshots provided it doesn’t look like a big game changer for these types of apps. Rather than killing off apps like Day One I suspect this will actually boost downloads for more powerful journalling apps⁵.

Keyboard - The new autocorrect feature works as advertised. I’ve definitely noticed an improvement over the past couple of days.

Autofill verification codes - You can now get autofill from email but even better the system can now delete those SMS and emails after the code has been used. A beautiful feature!

Music - Very little has changed in the Music app except the toolbar at the bottom has got a bit of a Vision Pro vibe added to it, animated album covers (available in my Apple Music Artwork Finder) now show during fullscreen playback, and there is a collaborative playlist feature coming later in the year. A bit of a disappointment that Spatial Audio and Lossless Audio still don’t have good discovery tools but that’ll keep me in business for another year 😂

PDFs - Not what I was expecting, but PDFs can now be autofilled. When this was first demoed I assumed it was for PDFs with actual coded forms but it became clear it also worked on scanned documents and is instead using all the fancy Text and Vision APIs. Whilst this was demoed in the iPad section of the keynote, it’s available on iPhone as well.

iPadOS 17

If iOS doesn’t have much going on this year, then iPadOS has even less. Apple seem to have taken a break this year from trying to create a new way to multitask and instead we’re left with two new features which both appeared in iOS in previous years:

Lock Screen - Everyone knew it was coming as there was a way to trick it into appearing in one of the iOS 16 betas. It’s got a new size of widget but there isn’t anything particularly exciting here in my opinion.

Health - 9 years after appearing on iPhone, the iPad now gets access to the Health app. Hopefully we’ll see it on macOS before 2042!

macOS Sonoma

When your headline feature is “new screensavers” it’s safe to say we’re in what people still refer to as a “Snow Leopard” year of bug fixes. The large majority of iOS features make their way across but there is also new support for widgets:

These can now be added to the desktop along with a nice animation to fade them out when you’re focussing on windows on top. The API for this is also very easy to implement and they get the interactivity that was added to iOS / iPadOS.

More impressive was that you can add iPhone widgets to your Mac without installing the relevant app! I was expecting this to be an Apple Silicon “install an iOS app and its widgets will magically work” but nope, they’re widgets updated over the network thanks to the technology behind hand-off. Really clever and might make me more likely to add a couple of widgets to my desktop.

watchOS 10

It was rumoured that watchOS 10 was going to get a big redesign and so it has come to pass… sort of. Apple are calling them “comprehensive app redesigns” but they aren’t radically different. That is aside from the Stopwatch app which now has a full white background completely killing the illusion of the watch fading into the bezels; it looks awful. Full colour backgrounds seem to be the thing for several apps and I just can’t get behind it. Apple’s marketing uses the phrase “apps that leave no corner untouched” and they’re right; the UI is touching all the corners 🤮

Aside from that there was a Snoopy watch face⁶ and some improvements for cyclists and hikers but not a whole lot else. Hopefully some new hardware in September will unlock some further improvements!

Other products

tvOS - There wasn’t enough for a dedicated segment but one new feature tvOS did get was the ability to make FaceTime calls thanks to Continuity Camera with an iPhone or iPad. I really like this and again think it’s a play for when a HomePod gets a screen at some point. The ability to “find” your Siri Remote is also something that has been on wishlists for a while and amazingly doesn’t require new hardware.

CarPlay - I’ve noticed a few bits of UI tweaking but there is also the option to let other iPhone users in your car set the music without having to use your phone. I come from a philosophy of “who’s driving gets to choose the music” so I’ll pass on that 🤣

AirPods - A couple of nice features for AirPods Pro 2 including Adaptive Audio (to seamlessly transition between noise cancellation and transparency mode) and Conversation Awareness (auto-ducking of audio when someone speaks to you). Nice to see AirPods getting software updates between hardware refreshes for features that could easily have been held back.

AirTags - Not announced in the keynote but it surely would have got a standing ovation if it was; AirTags can now be shared with your family. No longer will it look like my wife is stalking me when I take her car keys 😂

Frameworks

Swift Macros - I don’t like abstracting things away with magic, so it’s very clever that Xcode can expand macros in place so you can clearly see how they work. The example during the State of the Union of converting a completion-based async operation into an async / await one was particularly interesting but the new @Observable macro is the one that will tidy up a huge swathe of my recent SwiftUI code.

SwiftUI Animations - Speaking of things that are “magic”, SwiftUI was always very good at magically animating things… until it wasn’t. Too often something would animate in not quite the right way. With the new keyframe animations and other assorted goodies, I’m hoping I can get some of my SwiftUI interfaces to animate a bit more nicely now!

Animated SF Symbols - I think these could get overdone very quickly but I can definitely envision a few places where an animated icon would work well.

Swift Charts - I really liked the first version of Charts last year but my two wishes were interactivity and pie charts. These have both been added!

Swift Data - I’ve been burned by CoreData too many times and switched to using Realm over a decade ago. However, this does look really, really nice. I’m wary of jumping into anything new for what is the absolute foundation of an app but I can definitely see myself testing the waters with this framework later in the year.

TipKit - Easily my favourite new addition to the frameworks this year. Clients are always asking to add these little prompts within apps so being able to do it at the system level will be a huge help. I also notice that Apple are using this themselves throughout iOS 17 such as showing you how to undo or edit in Messages.

StoreKit Merchandise Views - I’m very impressed with the views Apple have created to let you easily create paywalls within your app. It’s almost like they’re looking for a way to keep developers using in-app payments 🤔

Hardware

15” MacBook Air - I used to use an M1 Max 16” MacBook Pro but the thickness of it (and some issues with fans) meant I swapped it in for an M2 13” MacBook Air and a bunch of store credit which may or may not have disappeared on Apple Watch bands 🫢 This machine seems even better; a larger screen without the added thickness and frankly unnecessary power of the Pro. I can imagine I’ll be getting one of these in a later generation.

Mac Studio 2nd Gen - I’ve been using the M1 Ultra version of the Mac Studio since it launched and it is far and away the best computer I’ve ever owned. This is an incredibly solid upgrade with the same pricing. What’s not to like?

Apple Silicon Mac Pro - It finally appears and uses the same M2 Ultra as the Mac Studio. The only difference is that it costs $3k more than the Studio because it has a huge case and the power to add PCIe expansion cards for very pro workflows. As it’s Apple Silicon, the RAM and SSD are set into the chip so cannot be changed after the fact. If you’re the target market for this device then you’ll know it, but the Mac Studio is likely the replacement for most Intel Mac Pro users. I think I’d rather have two Vision Pros 😉

That’s pretty much it for WWDC so far. I expect there may be a few more tidbits as the final sessions are released but for now all eyes are, quite literally, on Vision Pro.

The Spatial Audio Database

As many of you will already know, I’ve been running my own database of Apple’s Spatial Audio tracks in order to power the Music Library Tracker app and my Spatial Audio Finder website. Up until recently, I had also been posting any new tracks that were upgraded to Spatial Audio on the @NewSpatialAudio Twitter account.

That was until 19th May when my API key was revoked just as I went on holiday 🤦🏻‍♂️ The reason for this revocation was “violating Twitter rules and policies” but no concrete reason has been given and I can’t see anything in there that I’m falling foul of nor an easy way to appeal. This is not a huge surprise though; since Elon Musk took over, Twitter have been aggressively trying to get developers to pay for API access which is something I’m not prepared to do as it would likely cost hundreds of dollars per month.

In the back of my mind, I had long been planning for this eventuality but not so much that I’d actually written any code before the final tweet was sent. My plan was always to have a website where you could view the latest upgraded tracks but also provide a way for developers and pro users to access that data.

This is now a reality with the launch of The Spatial Audio Database accessible at spatialaudiodb.com:

On the homepage you can view the latest tracks that have been updated and filter them by either Dolby Atmos or Dolby Audio. Each track can be clicked to take you directly to Apple Music.

On the exports page, you can find RSS feeds for all Spatial Audio, just Dolby Atmos, and just Dolby Audio. These feeds work in real time and will give you the artwork, album title, artist name, and then a list of tracks that have been upgraded.

Going further, there are also daily CSV exports which are generated each morning to detail every single track that was upgraded on that day.

Whilst the database has over 10 million tracks in it and has been built up over the past year, I wasn’t storing the date on which I noticed tracks had been upgraded. That information is stored now but that’s why exports only start from 1st June 2023.

In the future I’ll be migrating the existing Spatial Audio Finder to this website and adding support to search by album and track as well as by artist, giving you the ability to search and browse the entire data set in a way that just isn’t possible on Apple Music.

Hopefully those of you that found the @NewSpatialAudio Twitter account useful for surfacing upgraded tracks will like this new website and find it an improvement! If you have any feedback or suggestions, please get in touch.

The Lossless Audio Database

In the last issue I mentioned that Lossless Audio support was going to be coming to the next release of Music Library Tracker. To do that, I’d obviously need a database containing all of that information so… all that stuff I just mentioned for The Spatial Audio Database? I did it for Lossless Audio as well!

You get the same list of recent tracks, RSS feeds, and CSV exports but this time for both Hi-Res Lossless and Lossless music. You can check it out at losslessaudiodb.com - enjoy!

ChatGPT as an Intern Developer

Despite being a bit of a no-show at WWDC⁷, it’s clear that the explosion of LLMs (Large Language Models) such as ChatGPT is already proving to be a foundational shift in how many people work.

I’ve been enjoying the writing of Ethan Mollick at One Useful Thing recently and in particular his article about “On-boarding your AI Intern”:

In fact, perhaps one of the most interesting aspects of our current AI moment is that several billion people just got free interns. They are weird, somewhat alien interns that work infinitely fast and sometimes lie to make you happy, but interns nonetheless.

So, how can you figure out how to best use your intern? Just like any new worker, you are going to have to learn its strengths and weaknesses; you are going to have to learn to train and work with it; and you are going to have to get a sense of where it is useful and where it is just annoying.

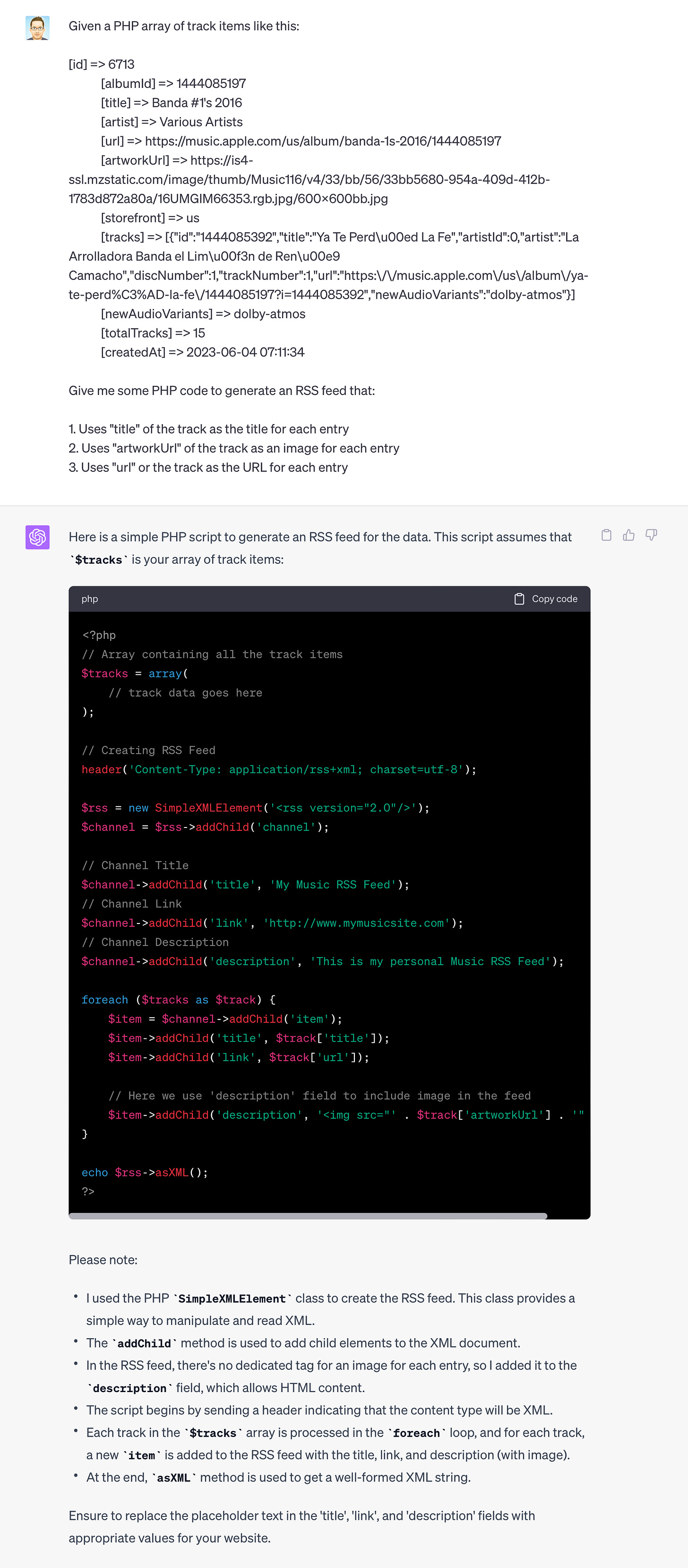

The idea that these new AI chat bots can be seen as fallible interns rather than all-knowing gods that are going to destroy the world certainly seems more grounded in reality to me. To that end, I’ve been playing around with ChatGPT Plus and ended up using it to write some of the exporting code for The Spatial Audio Database.

In the past, when looking to add a feature to a project I’d usually go back through my past work and copy and paste from there. If it was something I hadn’t worked on before, I’d look on Stack Overflow or find some articles or documentation to get an understanding.

With an AI intern, things become a bit easier as you can throw the problem at them in a more specific way. Rather than searching for “php generate rss feed”, I was able to give an example of my data array and ask it to create the code I needed using some specific variables:

Not only did it return well-formed code that compiled (complete with inline comments), it also gave me a set of notes to explain how it worked. It also reminded me to change the title, link, and description of the feed as it had given me some random examples inferred from my content.

This worked brilliantly as I was able to get a working RSS feed running in around 20 seconds. Whilst I have some RSS feed code already on my own personal website, that was written in a different language and so it would have taken me a few minutes to restructure my data accordingly. This isn’t something that is saving me days of time, but it is still saving me time.
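The generated PHP isn’t reproduced in this issue. As a rough illustration of the kind of transformation described above, here is a minimal sketch in Python (a swapped-in language, not the code ChatGPT produced) that turns an array of album data into an RSS 2.0 feed. The field names, URLs, and feed title are all hypothetical placeholders rather than the real schema of The Spatial Audio Database.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import format_datetime

# Hypothetical sample data: field names and values are placeholders.
albums = [
    {
        "album": "Example Album",
        "artist": "Example Artist",
        "artwork": "https://example.com/artwork.jpg",
        "url": "https://example.com/album/1",
        "tracks": ["Track One", "Track Two"],
        "updated": datetime(2023, 6, 1, 8, 0, tzinfo=timezone.utc),
    },
]


def build_rss(albums, title, link, description):
    """Build an RSS 2.0 document with one <item> per album."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = link
    ET.SubElement(channel, "description").text = description

    for album in albums:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = f"{album['artist']} - {album['album']}"
        ET.SubElement(item, "link").text = album["url"]
        ET.SubElement(item, "guid").text = album["url"]
        # RSS expects RFC 822 style dates; format_datetime produces that form.
        ET.SubElement(item, "pubDate").text = format_datetime(album["updated"])
        # Artwork attached as an enclosure; length "0" is a placeholder size.
        ET.SubElement(item, "enclosure", url=album["artwork"],
                      type="image/jpeg", length="0")
        # The upgraded tracks are listed one per line in the description.
        ET.SubElement(item, "description").text = "\n".join(album["tracks"])

    return ET.tostring(rss, encoding="unicode")


feed_xml = build_rss(
    albums,
    title="Example feed",          # placeholder: change for a real feed
    link="https://example.com/",   # placeholder
    description="Recently upgraded tracks (placeholder text)",
)
print(feed_xml)
```

Parsing the string back with `ET.fromstring(feed_xml)` is a quick way to confirm the output is well formed before pointing a feed reader at it.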

When I put in my actual data, I received some PHP warnings which I gave back to ChatGPT:

Notice how I formed the request; this isn’t a Google search command but instead a natural question I might have asked a colleague. Again, the “intern” provides an answer, an explanation, and some updated code.

I asked a few more follow-up questions, sometimes days later, about how to add a build time, last published date, and creator information and each time the results came back exactly as I needed them. I then moved on to the CSV export and ChatGPT was able to provide code for me to use based on specific data sets I’d provided.
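For a sense of what a daily CSV export involves, here is a minimal sketch, again in Python rather than the PHP actually used. The column names and rows are hypothetical; the real export format may well differ.

```python
import csv
import io
from datetime import date

# Hypothetical rows: column names and values are placeholders.
upgraded_tracks = [
    {"date": date(2023, 6, 1), "artist": "Example Artist",
     "album": "Example Album", "track": "Track One", "format": "Dolby Atmos"},
    {"date": date(2023, 6, 1), "artist": "Another Artist",
     "album": "Live, Vol. 2", "track": "Track Two", "format": "Dolby Audio"},
    {"date": date(2023, 6, 2), "artist": "Example Artist",
     "album": "Example Album", "track": "Track Three", "format": "Dolby Atmos"},
]

COLUMNS = ["date", "artist", "album", "track", "format"]


def export_day(tracks, day):
    """Return CSV text containing only the tracks upgraded on the given day."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    for row in tracks:
        if row["date"] == day:
            # Dates are written in ISO 8601 form (YYYY-MM-DD).
            writer.writerow({**row, "date": row["date"].isoformat()})
    return buffer.getvalue()


csv_text = export_day(upgraded_tracks, date(2023, 6, 1))
print(csv_text)
```

Using the `csv` module rather than joining strings by hand means values containing commas, such as the album title in the second row, are quoted correctly.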

This kind of chatbot relationship is excellent for independent developers. When you have a question about some specific area that you’ve maybe not worked with for a while, it can give you the samples you need. I particularly like the way it can act as a data transformer as you can give it the exact variables to use rather than having to adjust the code to match your project. Coding is the perfect area for a chatbot to work in as often there can only be one right answer; the code compiles, or it doesn’t.

Such a system is not perfect though. An LLM is essentially a very sophisticated text predictor and it will tell you things with confidence even if they’re not true. Imagining it as an intern frames in your mind that the answers may not be correct and do actually need checking. That said, it can work really well for doing initial research or simple coding tasks that you don’t want to do yourself.

I’m not comfortable with having ChatGPT write articles for me or generate huge swathes of code but these examples for adding data exports to The Spatial Audio Database have made me realise that I should get some practice with using these new tools. They are undoubtedly the future.

Recommendations

Video Games

The Legend of Zelda: Tears of the Kingdom - It’s good. It’s very, very good. I’ve played around 60 hours since it launched and I’ve barely scratched the surface in what is a huge game filled with things to do. When I say “huge”, it’s around 2-3x the size of Breath of the Wild. That it runs on the Switch is genuinely mind blowing, especially as there are no frame rate or graphical issues. It doesn’t even feel like it’s 30fps (and I consider myself very sensitive to frame rates). Undoubtedly one of the best games of the past 10-15 years. Go and get it played.

TV

Succession - I decided to start watching this just as the show finished 😂 I powered through season 1 in a couple of days and fully intend to do the same with the remaining three seasons. Excellent writing with excellent actors. Kieran Culkin gets a special mention from me for having the best lines (introducing his new girlfriend to his mother towards the end of the series is particularly memorable).

Roadmap

The roadmap is my way of committing to what I’m going to do before the next issue:

11th May - 7th June

Complete the Lossless Audio integration in Music Library Tracker v3 ❌

Get the BoardGameGeek collection sync complete in Board Game Lists ❌

Build something with the new iOS 17 SDK (or RealityOS 👀) ❌

Oof, not a good month in terms of achieving my goals but the Twitter API issues meant the Spatial Audio and Lossless Audio databases became a higher priority (and are still useful for Music Library Tracker v3) whilst iOS 17 didn’t have anything to entice me.

For the next issue, I feel like I have some breathing room again to get back to focussing on my apps so let’s try this list again!

8th June - 5th July (Issue #11)

Complete the Lossless Audio integration in Music Library Tracker v3

Get the BoardGameGeek collection sync complete in Board Game Lists

That wraps it up for this issue. I hope you found something of interest and that you’ll be able to recommend the newsletter to your friends, family, and colleagues. You can always comment on this issue or email me directly via ben@bendodson.com

¹ I love that they’ve put a full 3D render of The Steve Jobs Theatre into Keynote so you can practice your presentations on that stage. The seats look lovely 😍

² I used this feature on an HTC Vive to find my glass of wine… unfortunately I forgot I had a massive headset on and smashed it into that rather than into my mouth 🤦🏻‍♂️

³ The iPad was rumoured to be $1000 but then launched at $499. Steve Jobs made a big thing about it during that Keynote.

⁴ I haven’t even talked about the fact you can control this thing with your hands and eyes!

⁵ Sometimes Sherlocking will kill your entire business (e.g. Sticker Drop only does one thing and that’s now done by the system) but for more complex apps it can be a boon as users will try out the system version but then want to buy an app which adds more features. Task apps didn’t disappear after Reminders came out!

⁶ I just want Donald Duck and Goofy to be added to the Mickey and Minnie faces 🙏🏻

⁷ Apple is avoiding any big changes to Siri or a “co-pilot” for Xcode but there are still some LLMs in iOS 17. For example, the new autocorrect feature? That’s an LLM.